Background and context about using S3 for backup:

There are many sites that show how to backup the Synology device to AWS S3. Synology themselves posts numerous articles. I’m more interested in using S3 for off-site, long-term, semi-permanent, “ransomware-proof”, backup of my critical (irreplaceable) data. This is in addition having my entire Synology mirrored to a 14TB external HDD via USB (which rotates offsite), and another copy of critical data on my local server(s).

What’s your threat model?

My primary threat model is user-caused accidental deletion. A very close second is longer-term APT/malware. Exploring the ‘grayer’ parts of the Internet can lead to interesting circumstances, and “learning by doing” also offers opportunities for mistakes.

Today’s malware isn’t always in your face. It’s data harvesting, typically with a goal of financial gain. I want my data to be secure enough that I don’t have to worry about losing my local machine, local server, or my house. I don’t want to worry about ransomware, malware, or natural disasters – especially theft or fire.

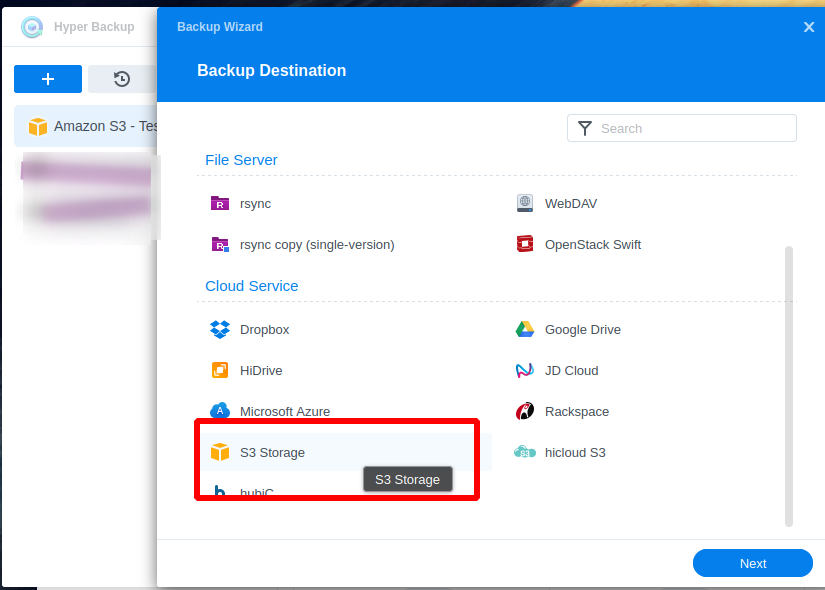

Hyper Backup is very straight-forward.

To use it to backup to S3, select S3, enter credentials/locations, then pick the backup settings you want.

Use AWS IAM to setup a user, with a minimal access policy, set credentials in HyperBackup for this specific S3 task, then adjust the backup settings as necessary.

What about the IAM Policy to use for backup?

If you’re here, and you know me, you know I’m all about securing this as best as possible. The first question I had was “what is the minimal access policy an account needs for successful backup”? After Googling, I ended up on this site, but I was wondering if I really needed to allow object deletion and other things. I also was curious if this could work with only a specific bucket, and also if it supported S3 Object Lock (write-once, read-many). As of this post, my current policy matches that post, but I’ve copied it here for your reference.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"s3:ListAllMyBuckets",

"s3:ListBucket"

],

"Resource": "*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObjectAcl",

"s3:GetObject",

"s3:GetLifecycleConfiguration",

"s3:PutBucketLogging",

"s3:PutLifecycleConfiguration",

"s3:GetBucketLogging",

"s3:CreateBucket",

"s3:DeleteObject",

"s3:GetBucketAcl",

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::BUCKET-NAME/*",

"arn:aws:s3:::BUCKET-NAME"

]

}

]

}How to use S3 Object Lock for a Very Secure backup strategy?

What I’m actually interested in, and couldn’t find with Googling, is how to make Hyper Backup able to backup to a bucket that has Object Lock enabled, which will effectively keep the data safe, even in the case of ransomware or data-corruption due to malware (locally or on the Synology itself).

Unfortunately – with all my experimentation and research completed, it appears that you cannot use something like AWS-ObjectLock in conjunction with HyberBackup to be more ‘ransomware-resistant’. However, the need for this is mitigated by file versioning and life-cycle rules, both within HyperBackup (so you can restore non-ransomewared, aka non-encrypted files even if you already backed up encrypted ones), and AWS-native rules, so that the backup from HyperBackup is also duplicated via a service-type account, where the HyperBackup AWS IAM API key can’t access the other bucket that contains data via the life-cycle rule.

Other considerations and questions.

- Does Synology Hyper Backup even work in conjunction with Object Lock?

- I was also wondering if it was better to let Hyper Backup handle versioning, or S3. In other words, always have Hyper Backup write/send/overwrite whatever (basically one-way forced backup), and have S3 handle the versioning and future restoration, or have Hyper Backup handle it.

- I think for ease-of-restoration, you’ll want Hyper Backup to version, and S3 just to allow overwriting. Maybe S3 can store a version, but I don’t think you want both systems versioning things.

- Seems like it has to be Hyper Backup – especially since “Hyper Backup Explorer” will force a download of the entire backup, and we can’t just browse S3 for a specific file (since it’s all proprietary, and encrypted).

- Also, since the format of Hyper Backup is proprietary, I suppose it handling file versioning will actual enable version-based restore, instead of recovery.

- The flip-side here is that if we’re using Object Lock, AWS forces versioning on the S3 bucket, and I’m really just interested in this data not being lost – not necessarily restoring a specific version from 38 days ago.

- https://www.reddit.com/r/backblaze/comments/qj1mbs/backblaze_synology_hyperbackup_and_file_lifecycle/

- How long should we leave Object Lock in place?

- How do we handle file changes?

As a new policy – I’m going to start publishing my posts, even if they aren’t necessarily in an ideally complete state. As they say, “the perfect is the enemy of the good” – and getting some information out on the Internet is better than nothing! Feel free to comment if you want more info/updates or just to offer feedback. Thank you!